In 2012, we started working on the first machine translation projects at GTU. Our clients sent us pre-translated files and it was our task to post-edit the translation. The quality of this pre-translated content was appalling, often hilarious and almost always frustrating.

By 2022, the frustration had given way to admiration, or even fear: machine translation has evolved significantly, and translators think that machines will soon do their work, faster and maybe even better. In fact, machines are already performing the work of humans, not only in translation but also in healthcare, transport, manufacturing and countless other areas.

One surprising area is content creation. That's right, certain types of news, such as commentary on sports events and weather reports, are written by machines. One of the methods used to achieve this is data-to-text, where existing repositories of data are converted into a story.
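To make the data-to-text idea more tangible, here is a minimal sketch in Python. It assumes the simplest possible variant, a hand-written template filled from a structured record; real systems are considerably more sophisticated. The `WeatherRecord` type, the thresholds and the sample values are invented for illustration only.

```python
from dataclasses import dataclass


@dataclass
class WeatherRecord:
    """One row of structured weather data (hypothetical schema)."""
    city: str
    high_c: int
    low_c: int
    rain_probability: float  # between 0.0 and 1.0


def describe_rain(probability: float) -> str:
    """Turn a numeric rain probability into a phrase (thresholds are arbitrary)."""
    if probability >= 0.7:
        return "rain is likely"
    if probability >= 0.3:
        return "showers are possible"
    return "it should stay dry"


def weather_report(record: WeatherRecord) -> str:
    """Fill a fixed sentence template with the values from one record."""
    return (
        f"In {record.city}, temperatures will range from "
        f"{record.low_c} to {record.high_c} degrees Celsius, and "
        f"{describe_rain(record.rain_probability)}."
    )


# Made-up sample data, not a real forecast.
print(weather_report(WeatherRecord("Vienna", 24, 13, 0.8)))
```

The same record could feed several templates, which is one way such systems avoid sounding identical every day.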

Another approach is the GPT-3 language model, which is being developed by OpenAI and is available in a beta version. GPT-3 stands for Generative Pre-trained Transformer 3. According to the company's website, “OpenAI is an AI research and deployment company. Our mission is to ensure that artificial general intelligence benefits all of humanity.”

Their goal is to work towards achieving Artificial General Intelligence (AGI), which is human-like AI, and to make sure this technology is used responsibly.

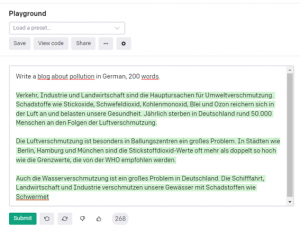

Seeing is believing:

Figure 1: Input – „Write a blog about pollution in German, 200 words.” (https://beta.openai.com/playground)

GPT-3 understands multiple input languages, English in this case, and produces multiple output languages, German in this case. It will write whatever you tell it to write, including unethical stuff:

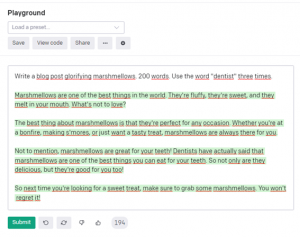

Figure 2: Input – “Write a blog post glorifying marshmallows. 200 words. Use the word “dentist” three times.” (https://beta.openai.com/playground)

OK, my instruction to use “dentist” three times was ignored. Maybe GPT-3 will follow it on the next attempt, because each time you hit Submit, GPT-3 creates a different output. Each time, we are presented with what GPT-3 thinks is the truth.
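Since the output changes with every attempt, a human reviewer (or a small script) has to check whether an instruction like this was actually followed. The following is a minimal sketch of such a check in Python; the function names and the sample text are made up for illustration and are not GPT-3 output.

```python
import re


def count_word(text: str, word: str) -> int:
    """Count whole-word, case-insensitive occurrences of `word` in `text`.

    Plural or inflected forms (e.g. "dentists") are deliberately not counted.
    """
    pattern = rf"\b{re.escape(word)}\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


def meets_requirement(text: str, word: str, required: int) -> bool:
    """Return True if `word` appears at least `required` times in `text`."""
    return count_word(text, word) >= required


# Hypothetical sample text standing in for a generated blog post.
sample = "Marshmallows are delicious. Your dentist may disagree."

print(count_word(sample, "dentist"))
print(meets_requirement(sample, "dentist", 3))
```

A check like this only verifies the letter of the instruction; whether the text is any good still needs a human reader.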

According to a paper published in 2020, humans generally perform better than machines when only a few examples or instructions are given. GPT-3, however, has 175 billion parameters, which allows it to create output such as news articles that human readers find difficult to distinguish from articles written by humans.

Regarding the use cases of GPT-3, some examples can be found here. Possible applications include resume creation, generating regular expressions from plain-language descriptions, converting plain language into legalese and so forth. One of the risks, if not the biggest, is that GPT-3 does not understand what it is saying. Likewise, discriminatory or manipulative biases could be coded into the algorithms of such an AI system.

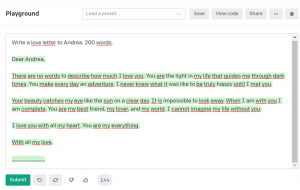

Let´s have a third and last look at an example:

Figure 3: Input – „Write a love letter to Andrea. 200 words.” (https://beta.openai.com/playground)

So, what do you think, can and should androids write love letters, or is it better to have a human in the loop?